Good Morning Friends,

This post is the continuation of Auto Growth Part 1.

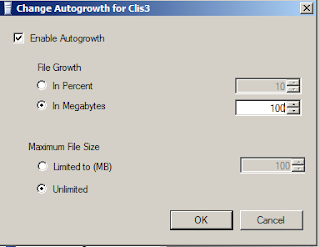

Part 1 we have seen the options available of Auto Growth in SQL Database and the parameters available. Now here we will see how to select Parameters.

For "Parameter 2" easily we can set to "Unlimited" because no one will stop the growth of there Database. So it is set to "Unlimited" (It will grow until your disk get full or in simple terms you can say limited to your Drive size).

For "Parameter 1" best I will select "Growth in MB". Following are the cases I'll try to explain while selecting this option:

Case 1:

1. Suppose I've a Database Clis3, which has one Data and one Log File with size is 50 and 10 GB respectively.

2. Growth for Log file is set to 100 MB. Recovery model in Full recovery mode.

3. Suppose there is a Bulk transaction in the Clis3 Database. Obviously the Log file will capture each and every transaction. SO the log file will start growing.

4. First it will start utilizing all the available VLF (Virtual Log File) up to 10 GB. Once it is full it will add 100 MB to Log file to write the transactions.

5. Now total Log size is 10.10 GB.

6. Once this 100 MB is also full it will add 100 MB more and so on. It will be done until the transaction is completed.

7. So now total Log size is 10.20 GB.

Here SQL Server is adding a defined amount of space (i.e. 100 MB) to the log file. This will indirectly control the growth of Log file.

Case 2:

1. Suppose for same Database Clis3, which has one Data and one Log File with size is 50 & 10 GB respectively.

2. Growth for Log file is set to 10 percent. Recovery model in Full recovery mode.

3. Suppose there is a Bulk transaction in the Clis3 Database. Obviously the Log file will capture each and every transaction. SO the log file will start growing.

4. First it will start utilizing all the available VLF up to 10 GB. Once it get full it will add 1024 MB (10% of 10 GB) to Log file to write the transactions.

5. Now total Log size is 11 GB.

6. Once this 1 GB is also full it will add 1127 MB more (10% of 11 GB) and so on. It will be done until the transaction is completed.

7. So now total Log size is 12.1 GB.

Here SQL Server is adding 10 percent of the current Log Size. Which means the size will vary depending upon the current Log File.

From the above image ideally for large Databases we can set for Auto Growth.

Case study for the above two cases:

1. Log File in Case 1 will have 10.20 GB at the end whereas;

2. Case 2 will have 12.10 GB at the end.

3. That means Log file will utilize 1.9 GB of unnecessary space from the disk which is unwanted.

4. Hence proves for larger Database it is good to define "Parameter 1" to "Growth in MB's" as it will avoid unnecessary utilization of disk space.

This post is the continuation of Auto Growth Part 1.

Part 1 we have seen the options available of Auto Growth in SQL Database and the parameters available. Now here we will see how to select Parameters.

For "Parameter 2" easily we can set to "Unlimited" because no one will stop the growth of there Database. So it is set to "Unlimited" (It will grow until your disk get full or in simple terms you can say limited to your Drive size).

For "Parameter 1" best I will select "Growth in MB". Following are the cases I'll try to explain while selecting this option:

Case 1:

1. Suppose I've a Database Clis3, which has one Data and one Log File with size is 50 and 10 GB respectively.

2. Growth for Log file is set to 100 MB. Recovery model in Full recovery mode.

3. Suppose there is a Bulk transaction in the Clis3 Database. Obviously the Log file will capture each and every transaction. SO the log file will start growing.

4. First it will start utilizing all the available VLF (Virtual Log File) up to 10 GB. Once it is full it will add 100 MB to Log file to write the transactions.

5. Now total Log size is 10.10 GB.

6. Once this 100 MB is also full it will add 100 MB more and so on. It will be done until the transaction is completed.

7. So now total Log size is 10.20 GB.

Here SQL Server is adding a defined amount of space (i.e. 100 MB) to the log file. This will indirectly control the growth of Log file.

Case 2:

1. Suppose for same Database Clis3, which has one Data and one Log File with size is 50 & 10 GB respectively.

2. Growth for Log file is set to 10 percent. Recovery model in Full recovery mode.

3. Suppose there is a Bulk transaction in the Clis3 Database. Obviously the Log file will capture each and every transaction. SO the log file will start growing.

4. First it will start utilizing all the available VLF up to 10 GB. Once it get full it will add 1024 MB (10% of 10 GB) to Log file to write the transactions.

5. Now total Log size is 11 GB.

6. Once this 1 GB is also full it will add 1127 MB more (10% of 11 GB) and so on. It will be done until the transaction is completed.

7. So now total Log size is 12.1 GB.

Here SQL Server is adding 10 percent of the current Log Size. Which means the size will vary depending upon the current Log File.

|

| Ideal Setting for Auto Growth |

Case study for the above two cases:

1. Log File in Case 1 will have 10.20 GB at the end whereas;

2. Case 2 will have 12.10 GB at the end.

3. That means Log file will utilize 1.9 GB of unnecessary space from the disk which is unwanted.

4. Hence proves for larger Database it is good to define "Parameter 1" to "Growth in MB's" as it will avoid unnecessary utilization of disk space.

Keep in touch... and Happy Learning....

I appreciate and thank you for reading this post. :)